Pengguna:Kekavigi/bak pasir: Difference between revisions


{{Short description|Change of coordinates in linear algebra}}

|||

Matrix equivalence

|||

{{multiple image |

|||

In [[linear algebra]], two rectangular ''m''-by-''n'' [[Matrix (mathematics)|matrices]] ''A'' and ''B'' are called '''equivalent''' if |

|||

| align = right |

|||

| direction = vertical |

|||

| footer = |

|||

| width1 = 290 |

|||

| image1 = 3d basis transformation.svg |

|||

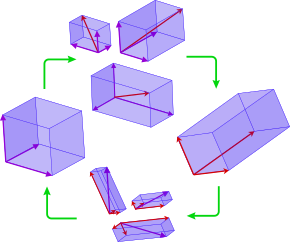

| caption1 = A [[linear combination]] of one basis of vectors (purple) obtains new vectors (red). If they are [[linearly independent]], these form a new basis. The linear combinations relating the first basis to the other extend to a [[linear transformation]], called the change of basis. |

|||

| width2 = 122 |

|||

| image2 = 3d two bases same vector.svg |

|||

| caption2 = A vector represented by two different bases (purple and red arrows). |

|||

}} |

|||

In [[linear algebra]],

|||

: <math>B = Q^{-1} A P</math> |

|||

for some [[Invertible matrix|invertible]] ''n''-by-''n'' matrix ''P'' and some invertible ''m''-by-''m'' matrix ''Q''. Equivalent matrices represent the same [[Linear map|linear transformation]] ''V'' → ''W'' under two different choices of a pair of [[Basis (linear algebra)|bases]] of ''V'' and ''W'', with ''P'' and ''Q'' being the [[change of basis]] matrices in ''V'' and ''W'' respectively. |

|||

any [[Basis (linear algebra)|ordered basis]] of any [[vector space]] of [[Dimension (vector space)|dimension]] <math>n</math>

|||

The notion of equivalence should not be confused with that of [[Similar matrix|similarity]], which is only defined for square matrices, and is much more restrictive (similar matrices are certainly equivalent, but equivalent square matrices need not be similar). That notion corresponds to matrices representing the same [[endomorphism]] ''V'' → ''V'' under two different choices of a ''single'' basis of ''V'', used both for initial vectors and their images. |

|||

any vector in the vector space is uniquely represented by a [[coordinate vector]]

|||

== Properties == |

|||

Matrix equivalence is an [[equivalence relation]] on the space of rectangular matrices. |

|||

--- |

|||

For two rectangular matrices of the same size, their equivalence can also be characterized by the following conditions |

|||

an ordered basis allows every/any/all elements of any vector space of dimension n to be represented by a coordinate vector, namely a [[Sequence|sequence]] of n [[Scalar (mathematics)|scalars]] called [[Coordinate system|coordinates]].

|||

* The matrices can be transformed into one another by a combination of [[Elementary row operation|elementary row and column operations]]. |

|||

* Two matrices are equivalent if and only if they have the same [[Rank of a matrix|rank]]. |

|||

--- |

|||

If matrices are row equivalent then they are also matrix equivalent. However, the converse does not hold; matrices that are matrix equivalent are not necessarily row equivalent. This makes matrix equivalence a generalization of row equivalence. <ref name=":0">{{Cite book|last=Hefferon|first=Jim|url=https://hefferon.net/linearalgebra/|title=Linear Algebra|edition=4th|pages=270-272|language=en|chapter=}}{{Creative Commons text attribution notice|cc=bysa3|from this source=yes}}</ref> |

|||

a basis as a reference for direction

|||

== Canonical form == |

|||

The [[Rank of a matrix|rank]] property yields an intuitive [[canonical form]] for matrices of the equivalence class of rank <math>k</math> as |

|||

--- |

|||

<math> |

|||

\begin{pmatrix} |

|||

1 & 0 & 0 & & \cdots & & 0 \\ |

|||

0 & 1 & 0 & & \cdots & & 0 \\ |

|||

0 & 0 & \ddots & & & & 0\\ |

|||

\vdots & & & 1 & & & \vdots \\ |

|||

& & & & 0 & & \\ |

|||

& & & & & \ddots & \\ |

|||

0 & & & \cdots & & & 0 |

|||

\end{pmatrix} |

|||

</math>, |

|||

<two different bases ''considered''>, the coordinate vector representing the element <math>v</math> with respect to/over the first basis is, in general, different from the coordinate vector representing that element --- the other basis.

|||

where the number of <math>1</math>s on the diagonal is equal to <math>k</math>. This is a special case of the [[Smith normal form]], which generalizes this concept from vector spaces to [[Free module|free modules]] over [[Principal ideal domain|principal ideal domains]]. Thus:<blockquote>'''Theorem''': Any '''''m'''''×'''''n''''' matrix of rank '''''k''''' is matrix equivalent to the '''''m'''''×'''''n''''' matrix that is all zeroes except that the first '''''k''''' diagonal entries are ones. <ref name=":0" /> '''Corollary''': Matrix equivalence classes are characterized by rank: two same-sized matrices are matrix equivalent if and only if they have the same rank. <ref name=":0" /></blockquote>

|||

--- |

|||

== 2x2 Matrices == |

|||

2x2 matrices only have three possible ranks: zero, one, or two. This means all 2x2 matrices fit into one of three matrix equivalent classes:<ref name=":0" /> |

|||

<math> |

|||

\begin{pmatrix} |

|||

0 & 0 \\ |

|||

0 & 0\\ |

|||

\end{pmatrix} |

|||

</math> , <math> |

|||

\begin{pmatrix} |

|||

1 & 0 \\ |

|||

0 & 0\\ |

|||

\end{pmatrix} |

|||

</math> , <math> |

|||

\begin{pmatrix} |

|||

1 & 0 \\ |

|||

0 & 1 \\ |

|||

\end{pmatrix} |

|||

</math> |

|||

Every 2×2 matrix is therefore equivalent to exactly one of these matrices. There is only one matrix of rank zero, but the other two classes have infinitely many members; the representatives above are the simplest matrix in each class.

|||

In [[mathematics]], an [[ordered basis]] of a [[vector space]] of finite [[Dimension (vector space)|dimension]] {{mvar|n}} allows representing uniquely any element of the vector space by a [[coordinate vector]], which is a [[Finite sequence|sequence]] of {{mvar|n}} [[Scalar (mathematics)|scalars]] called [[coordinates]]. If two different bases are considered, the coordinate vector that represents a vector {{mvar|v}} on one basis is, in general, different from the coordinate vector that represents {{mvar|v}} on the other basis. A '''change of basis''' consists of converting every assertion expressed in terms of coordinates relative to one basis into an assertion expressed in terms of coordinates relative to the other basis.<ref>{{harvtxt|Anton|1987|pp=221–237}}</ref><ref>{{harvtxt|Beauregard|Fraleigh|1973|pp=240–243}}</ref><ref>{{harvtxt|Nering|1970|pp=50–52}}</ref>

|||

== Matrix Similarity == |

|||

Matrix similarity is a special case of matrix equivalence. If two matrices are similar then they are also equivalent. However, the converse is not true.<ref>{{Cite book|last=Hefferon|first=Jim|url=https://hefferon.net/linearalgebra/|title=Linear Algebra|edition=4th|page=405|language=en}}{{Creative Commons text attribution notice|cc=bysa3|from this source=yes}}</ref> For example these two matrices are equivalent but not similar: |

|||

Such a conversion results from the ''change-of-basis formula'' which expresses the coordinates relative to one basis in terms of coordinates relative to the other basis. Using [[Matrix (mathematics)|matrices]], this formula can be written |

|||

<math> |

|||

\begin{pmatrix} |

|||

: <math>\mathbf x_\mathrm{old} = A \,\mathbf x_\mathrm{new},</math> |

|||

1 & 0 \\ |

|||

0 & 1 \\ |

|||

where "old" and "new" refer respectively to the initially defined basis and the other basis, <math>\mathbf x_\mathrm{old}</math> and <math>\mathbf x_\mathrm{new}</math> are the [[Column vector|column vectors]] of the coordinates of the same vector on the two bases, and <math>A</math> is the '''change-of-basis matrix''' (also called '''transition matrix'''), which is the matrix whose columns are the coordinates of the new [[Basis vector|basis vectors]] on the old basis.

|||

\end{pmatrix} |

|||

</math> , <math> |

|||

This article deals mainly with finite-dimensional vector spaces. However, many of the principles are also valid for infinite-dimensional vector spaces. |

|||

\begin{pmatrix} |

|||

1 & 2 \\ |

|||

== Change of basis formula == |

|||

0 & 3 \\ |

|||

Let <math>B_\mathrm {old}=(v_1, \ldots, v_n)</math> be a basis of a [[finite-dimensional vector space]] {{mvar|V}} over a [[Field (mathematics)|field]] {{mvar|F}}.{{efn|Although a basis is generally defined as a set of vectors (for example, as a spanning set that is linearly independent), the [[tuple]] notation is convenient here, since the indexing by the first positive integers makes the basis an [[ordered basis]].}} |

|||

\end{pmatrix} |

|||

</math> |

|||

For {{math|1=''j'' = 1, ..., ''n''}}, one can define a vector {{math|''w''{{sub|''j''}}}} by its coordinates <math>a_{i,j}</math> over <math>B_\mathrm {old}\colon</math> |

|||

: <math>w_j=\sum_{i=1}^n a_{i,j}v_i.</math> |

|||

Let |

|||

: <math>A=\left(a_{i,j}\right)_{i,j}</math> |

|||

be the [[Matrix (mathematics)|matrix]] whose {{mvar|j}}th column is formed by the coordinates of {{math|''w''{{sub|''j''}}}}. (Here and in what follows, the index {{mvar|i}} refers always to the rows of {{mvar|A}} and the <math>v_i,</math> while the index {{mvar|j}} refers always to the columns of {{mvar|A}} and the <math>w_j;</math> such a convention is useful for avoiding errors in explicit computations.) |

|||

Setting <math>B_\mathrm {new}=(w_1, \ldots, w_n),</math> one has that <math>B_\mathrm {new}</math> is a basis of {{mvar|V}} if and only if the matrix {{mvar|A}} is [[Invertible matrix|invertible]], or equivalently if it has a nonzero [[determinant]]. In this case, {{mvar|A}} is said to be the ''change-of-basis matrix'' from the basis <math>B_\mathrm {old}</math> to the basis <math>B_\mathrm {new}.</math><ANTON><FRIEDBERG?> |

|||

Given a vector <math>z\in V,</math> let <math>(x_1, \ldots, x_n) </math> be the coordinates of <math>z</math> over <math>B_\mathrm {old},</math> and <math>(y_1, \ldots, y_n) </math> its coordinates over <math>B_\mathrm {new};</math> that is |

|||

: <math>z=\sum_{i=1}^nx_iv_i = \sum_{j=1}^ny_jw_j.</math> |

|||

(One could take the same summation index for the two sums, but systematically choosing the index {{mvar|i}} for the old basis and {{mvar|j}} for the new one makes the formulas that follow clearer, and helps avoid errors in proofs and explicit computations.)

|||

The ''change-of-basis formula'' expresses the coordinates over the old basis in terms of the coordinates over the new basis. With the above notation, it is

|||

: <math>x_i = \sum_{j=1}^n a_{i,j}y_j\qquad\text{for } i=1, \ldots, n.</math> |

|||

In terms of matrices, the change of basis formula is |

|||

: <math>\mathbf x = A\,\mathbf y,</math> |

|||

where <math>\mathbf x</math> and <math>\mathbf y</math> are the column vectors of the coordinates of {{mvar|z}} over <math>B_\mathrm {old}</math> and <math>B_\mathrm {new},</math> respectively. |

|||

''Proof:'' Using the above definition of the change-of-basis matrix, one has

|||

: <math>\begin{align} |

|||

z&=\sum_{j=1}^n y_jw_j\\ |

|||

&=\sum_{j=1}^n \left(y_j\sum_{i=1}^n a_{i,j}v_i\right)\\ |

|||

&=\sum_{i=1}^n \left(\sum_{j=1}^n a_{i,j} y_j \right) v_i. |

|||

\end{align}</math> |

|||

As <math>z=\textstyle \sum_{i=1}^n x_iv_i,</math> the change-of-basis formula results from the uniqueness of the decomposition of a vector over a basis. |

|||

== Example == |

|||

Consider the [[Euclidean vector space]] <math>\mathbb R^2.</math> Its [[standard basis]] consists of the vectors <math>v_1= (1,0)</math> and <math>v_2= (0,1).</math> If one [[Rotation (mathematics)|rotates]] them by an angle of {{mvar|t}}, one gets a ''new basis'' formed by <math>w_1=(\cos t, \sin t)</math> and <math>w_2=(-\sin t, \cos t).</math> |

|||

So, the change-of-basis matrix is <math>\begin{bmatrix} |

|||

\cos t& -\sin t\\ |

|||

\sin t& \cos t |

|||

\end{bmatrix}.</math> |

|||

The change-of-basis formula asserts that, if <math>y_1, y_2</math> are the new coordinates of a vector <math>(x_1, x_2),</math> then one has |

|||

: <math>\begin{bmatrix}x_1\\x_2\end{bmatrix}=\begin{bmatrix} |

|||

\cos t& -\sin t\\ |

|||

\sin t& \cos t |

|||

\end{bmatrix}\,\begin{bmatrix}y_1\\y_2\end{bmatrix}.</math> |

|||

That is, |

|||

: <math>x_1=y_1\cos t - y_2\sin t \qquad\text{and}\qquad x_2=y_1\sin t + y_2\cos t.</math> |

|||

This may be verified by writing |

|||

: <math>\begin{align} |

|||

x_1v_1+x_2v_2 &= (y_1\cos t - y_2\sin t) v_1 + (y_1\sin t + y_2\cos t) v_2\\ |

|||

&= y_1 (\cos (t) v_1 + \sin(t)v_2) + y_2 (-\sin(t) v_1 +\cos(t) v_2)\\ |

|||

&=y_1w_1+y_2w_2. |

|||

\end{align}</math> |

|||

== In terms of linear maps == |

|||

Normally, a [[Matrix (mathematics)|matrix]] represents a [[linear map]], and the product of a matrix and a column vector represents the [[function application]] of the corresponding linear map to the vector whose coordinates form the column vector. The change-of-basis formula is a specific case of this general principle, although this is not immediately clear from its definition and proof. |

|||

When one says that a matrix ''represents'' a linear map, one refers implicitly to [[Basis (linear algebra)|bases]] of the vector spaces involved, and to the fact that the choice of a basis induces an [[Linear isomorphism|isomorphism]] between a vector space and {{math|''F''{{sup|''n''}}}}, where {{mvar|F}} is the field of scalars. When only one basis is considered for each vector space, it is worthwhile to leave this isomorphism implicit, and to work [[up to]] an isomorphism. As several bases of the same vector space are considered here, a more accurate wording is required.

|||

Let {{mvar|F}} be a [[Field (mathematics)|field]]. The set <math>F^n</math> of the [[Tuple|{{mvar|n}}-tuples]] is an {{mvar|F}}-vector space whose addition and scalar multiplication are defined component-wise. Its [[standard basis]] is the basis that has as its {{mvar|i}}th element the tuple with all components equal to {{math|0}} except the {{mvar|i}}th, which is {{math|1}}.

|||

A basis <math>B=(v_1, \ldots, v_n)</math> of an {{mvar|F}}-vector space {{mvar|V}} defines a [[linear isomorphism]] <math>\phi\colon F^n\to V</math> by

|||

: <math>\phi(x_1,\ldots,x_n)=\sum_{i=1}^n x_i v_i.</math> |

|||

Conversely, such a linear isomorphism defines a basis, which is the image by <math>\phi</math> of the standard basis of <math>F^n.</math> |

|||

Let <math>B_\mathrm {old}=(v_1, \ldots, v_n)</math> be the "old basis" of a change of basis, and <math>\phi_\mathrm {old}</math> the associated isomorphism. Given a change-of-basis matrix {{mvar|A}}, one can consider it as the matrix of an [[endomorphism]] <math>\psi_A</math> of <math>F^n.</math> Finally, define

|||

: <math>\phi_\mathrm{new}=\phi_\mathrm{old}\circ\psi_A</math> |

|||

(where <math>\circ</math> denotes [[function composition]]), and |

|||

: <math>B_\mathrm{new}= \phi_\mathrm{new}(\phi_\mathrm{old}^{-1}(B_\mathrm{old})). </math> |

|||

A straightforward verification shows that this definition of <math>B_\mathrm{new}</math> is the same as that of the preceding section. |

|||

Now, by composing the equation <math>\phi_\mathrm{new}=\phi_\mathrm{old}\circ\psi_A</math> with <math>\phi_\mathrm{old}^{-1}</math> on the left and <math>\phi_\mathrm{new}^{-1}</math> on the right, one gets |

|||

: <math>\phi_\mathrm{old}^{-1} = \psi_A \circ \phi_\mathrm{new}^{-1}.</math> |

|||

It follows that, for <math>v\in V,</math> one has |

|||

: <math>\phi_\mathrm{old}^{-1}(v)= \psi_A(\phi_\mathrm{new}^{-1}(v)),</math> |

|||

which is the change-of-basis formula expressed in terms of linear maps instead of coordinates. |

|||

== Function defined on a vector space == |

|||

A [[Function (mathematics)|function]] that has a vector space as its [[Domain of a function|domain]] is commonly specified as a [[multivariate function]] whose variables are the coordinates on some basis of the vector on which the function is [[Function application|applied]]. |

|||

When the basis is changed, the [[Expression (mathematics)|expression]] of the function is changed. This change can be computed by replacing the "old" coordinates with their expressions in terms of the "new" coordinates. More precisely, if {{math|''f''('''x''')}} is the expression of the function in terms of the old coordinates, and if {{math|'''x''' {{=}} ''A'''''y'''}} is the change-of-basis formula, then {{math|''f''(''A'''''y''')}} is the expression of the same function in terms of the new coordinates.

|||

The fact that the change-of-basis formula expresses the old coordinates in terms of the new ones may seem unnatural, but it is useful here, as no [[matrix inversion]] is needed.

|||

As the change-of-basis formula involves only [[Linear function|linear functions]], many function properties are kept by a change of basis. This allows defining these properties as properties of functions of a variable vector that are not related to any specific basis. So, a function whose domain is a vector space or a subset of it is |

|||

* a linear function, |

|||

* a [[polynomial function]], |

|||

* a [[continuous function]], |

|||

* a [[differentiable function]], |

|||

* a [[smooth function]], |

|||

* an [[analytic function]], |

|||

if the multivariate function that represents it on some basis—and thus on every basis—has the same property. |

|||

This is especially useful in the theory of [[Manifold|manifolds]], as this allows extending the concepts of continuous, differentiable, smooth and analytic functions to functions that are defined on a manifold.

|||

== Linear maps == |

|||

Consider a [[linear map]] {{math|''T'': ''W'' → ''V''}} from a [[vector space]] {{mvar|W}} of dimension {{mvar|n}} to a vector space {{mvar|V}} of dimension {{mvar|m}}. It is represented on "old" bases of {{mvar|V}} and {{mvar|W}} by an {{math|''m''×''n''}} matrix {{mvar|M}}. A change of bases is defined by an {{math|''m''×''m''}} change-of-basis matrix {{mvar|P}} for {{mvar|V}}, and an {{math|''n''×''n''}} change-of-basis matrix {{mvar|Q}} for {{mvar|W}}.

|||

On the "new" bases, the matrix of {{mvar|T}} is |

|||

: <math>P^{-1}MQ.</math> |

|||

This is a straightforward consequence of the change-of-basis formula. |

|||

== Endomorphisms == |

|||

[[Endomorphism|Endomorphisms]] are linear maps from a vector space {{mvar|V}} to itself. For a change of basis, the formula of the preceding section applies, with the same change-of-basis matrix on both sides of the formula. That is, if {{mvar|M}} is the [[square matrix]] of an endomorphism of {{mvar|V}} over an "old" basis, and {{mvar|P}} is a change-of-basis matrix, then the matrix of the endomorphism on the "new" basis is

|||

: <math>P^{-1}MP.</math> |

|||

As every [[invertible matrix]] can be used as a change-of-basis matrix, this implies that two matrices are [[Similar matrices|similar]] if and only if they represent the same endomorphism on two different bases. |

|||

== Bilinear forms == |

|||

A ''[[bilinear form]]'' on a vector space ''V'' over a [[Field (mathematics)|field]] {{mvar|F}} is a function {{math|''V'' × ''V'' → F}} which is [[Linear map|linear]] in both arguments. That is, {{math|''B'' : ''V'' × ''V'' → F}} is bilinear if the maps <math>v \mapsto B(v, w)</math> and <math>v \mapsto B(w, v)</math> are linear for every fixed <math>w\in V.</math> |

|||

The matrix {{math|'''B'''}} of a bilinear form {{mvar|B}} on a basis <math>(v_1, \ldots, v_n) </math> (the "old" basis in what follows) is the matrix whose entry in the {{mvar|i}}th row and {{mvar|j}}th column is {{math|''B''(''v''{{sub|''i''}}, ''v''{{sub|''j''}})}}. It follows that if {{math|'''v'''}} and {{math|'''w'''}} are the column vectors of the coordinates of two vectors {{mvar|v}} and {{mvar|w}}, one has

|||

: <math>B(v, w)=\mathbf v^{\mathsf T}\mathbf B\mathbf w,</math> |

|||

where <math>\mathbf v^{\mathsf T}</math> denotes the [[transpose]] of the matrix {{math|'''v'''}}. |

|||

If {{mvar|P}} is a change of basis matrix, then a straightforward computation shows that the matrix of the bilinear form on the new basis is |

|||

: <math>P^{\mathsf T}\mathbf B P.</math> |

|||

A [[symmetric bilinear form]] is a bilinear form {{mvar|B}} such that <math>B(v,w)=B(w,v)</math> for every {{mvar|v}} and {{mvar|w}} in {{mvar|V}}. It follows that the matrix of {{mvar|B}} on any basis is [[Symmetric matrix|symmetric]]. This implies that the property of being a symmetric matrix must be preserved by the above change-of-basis formula. One can also check this by noting that the transpose of a matrix product is the product of the transposes computed in the reverse order. In particular,

|||

: <math>(P^{\mathsf T}\mathbf B P)^{\mathsf T} = P^{\mathsf T}\mathbf B^{\mathsf T} P,</math> |

|||

and the two members of this equation equal <math>P^{\mathsf T} \mathbf B P</math> if the matrix {{math|'''B'''}} is symmetric. |

|||

If the [[Characteristic (algebra)|characteristic]] of the ground field {{mvar|F}} is not two, then for every symmetric bilinear form there is a basis for which the matrix is [[Diagonal matrix|diagonal]]. Moreover, the resulting nonzero entries on the diagonal are defined up to multiplication by a square. So, if the ground field is the field <math>\mathbb R</math> of the [[Real number|real numbers]], these nonzero entries can be chosen to be either {{math|1}} or {{math|–1}}. [[Sylvester's law of inertia]] is a theorem that asserts that the numbers of {{math|1}} and of {{math|–1}} depend only on the bilinear form, and not on the change of basis.

|||

Symmetric bilinear forms over the reals are often encountered in [[geometry]] and [[physics]], typically in the study of [[Quadric|quadrics]] and of the [[inertia]] of a [[rigid body]]. In these cases, [[orthonormal bases]] are especially useful; this means that one generally prefers to restrict changes of basis to those that have an [[Orthogonal matrix|orthogonal]] change-of-basis matrix, that is, a matrix such that <math>P^{\mathsf T}=P^{-1}.</math> Such matrices have the fundamental property that the change-of-basis formula is the same for a symmetric bilinear form and the endomorphism that is represented by the same symmetric matrix. The [[spectral theorem]] asserts that, given such a symmetric matrix, there is an orthogonal change of basis such that the resulting matrix (of both the bilinear form and the endomorphism) is a diagonal matrix with the [[eigenvalues]] of the initial matrix on the diagonal. It follows that, over the reals, if the matrix of an endomorphism is symmetric, then it is [[Diagonalizable matrix|diagonalizable]].

|||

== See also == |


* [[Active and passive transformation]] |

|||

* [[Row equivalence]] |

|||

* [[Covariance and contravariance of vectors]] |

|||

* [[Matrix congruence]] |

|||

* [[Integral transform]], the continuous analogue of change of basis. |

|||

== Notes == |

|||

{{notelist}} |

|||

== References == |


{{Reflist}} |

|||

<references /> |

|||

{{Matrix classes}} |

|||

== Bibliography == |

|||

* {{citation|last1=Anton|first1=Howard|year=1987|isbn=0-471-84819-0|title=Elementary Linear Algebra|edition=5th|publisher=[[John Wiley & Sons|Wiley]]|location=New York}} |

|||

* {{citation|last1=Beauregard|first1=Raymond A.|last2=Fraleigh|first2=John B.|title=A First Course In Linear Algebra: with Optional Introduction to Groups, Rings, and Fields|location=Boston|publisher=[[Houghton Mifflin Company]]|year=1973|isbn=0-395-14017-X|url-access=registration|url=https://archive.org/details/firstcourseinlin0000beau}} |

|||

* {{citation|last1=Nering|first1=Evar D.|title=Linear Algebra and Matrix Theory|edition=2nd|location=New York|publisher=[[John Wiley & Sons|Wiley]]|year=1970|lccn=76091646}} |

|||

== External links == |

|||

* [http://ocw.mit.edu/courses/mathematics/18-06-linear-algebra-spring-2010/video-lectures/lecture-31-change-of-basis-image-compression/ MIT Linear Algebra Lecture on Change of Basis], from MIT OpenCourseWare |

|||

* [https://www.youtube.com/watch?v=1j5WnqwMdCk Khan Academy Lecture on Change of Basis], from Khan Academy |

|||


{{linear algebra}} |

||

{{Authority control}} |

|||

Revision as of 11 March 2024, 09:39

In linear algebra,

any ordered basis of any vector space of dimension <math>n</math>

any vector in the vector space is uniquely represented by a coordinate vector

---

an ordered basis allows every/any/all elements of any vector space of dimension n to be represented by a coordinate vector, namely a sequence of n scalars called coordinates.

---

a basis as a reference for direction

---

<two different bases considered>, the coordinate vector representing the element <math>v</math> with respect to/over the first basis is, in general, different from the coordinate vector representing that element --- the other basis.

---

In mathematics, an ordered basis of a vector space of finite dimension n allows representing uniquely any element of the vector space by a coordinate vector, which is a sequence of n scalars called coordinates. If two different bases are considered, the coordinate vector that represents a vector v on one basis is, in general, different from the coordinate vector that represents v on the other basis. A change of basis consists of converting every assertion expressed in terms of coordinates relative to one basis into an assertion expressed in terms of coordinates relative to the other basis.[1][2][3]

Such a conversion results from the change-of-basis formula, which expresses the coordinates relative to one basis in terms of the coordinates relative to the other basis. Using matrices, this formula can be written

: <math>\mathbf x_\mathrm{old} = A \,\mathbf x_\mathrm{new},</math>

where "old" and "new" refer respectively to the initially defined basis and the other basis, <math>\mathbf x_\mathrm{old}</math> and <math>\mathbf x_\mathrm{new}</math> are the column vectors of the coordinates of the same vector on the two bases, and <math>A</math> is the change-of-basis matrix (also called transition matrix), which is the matrix whose columns are the coordinates of the new basis vectors on the old basis.

This article deals mainly with finite-dimensional vector spaces. However, many of the principles are also valid for infinite-dimensional vector spaces.

Change of basis formula

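Let <math>B_\mathrm{old}=(v_1, \ldots, v_n)</math> be a basis of a finite-dimensional vector space V over a field F.[a]

For j = 1, ..., n, one can define a vector <math>w_j</math> by its coordinates <math>a_{i,j}</math> over <math>B_\mathrm{old}\colon</math>

: <math>w_j=\sum_{i=1}^n a_{i,j}v_i.</math>

Let

: <math>A=\left(a_{i,j}\right)_{i,j}</math>

be the matrix whose jth column is formed by the coordinates of <math>w_j</math>. (Here and in what follows, the index i always refers to the rows of A and to the <math>v_i,</math> while the index j always refers to the columns of A and to the <math>w_j;</math> such a convention is useful for avoiding errors in explicit computations.)

Setting <math>B_\mathrm{new}=(w_1, \ldots, w_n),</math> one has that <math>B_\mathrm{new}</math> is a basis of V if and only if the matrix A is invertible, or equivalently if it has a nonzero determinant. In this case, A is said to be the change-of-basis matrix from the basis <math>B_\mathrm{old}</math> to the basis <math>B_\mathrm{new}.</math><ANTON><FRIEDBERG?>

Given a vector <math>z\in V,</math> let <math>(x_1, \ldots, x_n)</math> be the coordinates of <math>z</math> over <math>B_\mathrm{old},</math> and <math>(y_1, \ldots, y_n)</math> its coordinates over <math>B_\mathrm{new};</math> that is,

: <math>z=\sum_{i=1}^n x_iv_i = \sum_{j=1}^n y_jw_j.</math>

(One could take the same summation index for the two sums, but systematically choosing the index i for the old basis and j for the new one makes the formulas that follow clearer, and helps avoid errors in proofs and explicit computations.)

The change-of-basis formula expresses the coordinates over the old basis in terms of the coordinates over the new basis. With the above notation, it is

: <math>x_i = \sum_{j=1}^n a_{i,j}y_j\qquad\text{for } i=1, \ldots, n.</math>

In terms of matrices, the change-of-basis formula is

: <math>\mathbf x = A\,\mathbf y,</math>

where <math>\mathbf x</math> and <math>\mathbf y</math> are the column vectors of the coordinates of z over <math>B_\mathrm{old}</math> and <math>B_\mathrm{new},</math> respectively.

Proof: Using the above definition of the change-of-basis matrix, one has

: <math>\begin{align}
z&=\sum_{j=1}^n y_jw_j\\
&=\sum_{j=1}^n \left(y_j\sum_{i=1}^n a_{i,j}v_i\right)\\
&=\sum_{i=1}^n \left(\sum_{j=1}^n a_{i,j} y_j \right) v_i.
\end{align}</math>

As <math>z=\textstyle \sum_{i=1}^n x_iv_i,</math> the change-of-basis formula results from the uniqueness of the decomposition of a vector over a basis.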
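The formula can be checked on a small case. The following is a minimal Python sketch and not part of the sourced text: the old basis `v`, the matrix `A`, and the sample coordinates `y` are hypothetical values chosen for illustration, and the helper `combine` is introduced only for this example.

```python
# Hypothetical old basis of R^2, given by components in the standard basis.
v = [(1, 1), (1, -1)]

# Hypothetical change-of-basis matrix A; column j holds the coordinates
# of w_j over the old basis.
A = [[2, 1],
     [1, 1]]
n = len(v)

def combine(coeffs, vectors):
    """Return the linear combination sum_k coeffs[k] * vectors[k]."""
    dim = len(vectors[0])
    return tuple(sum(c * vec[d] for c, vec in zip(coeffs, vectors))
                 for d in range(dim))

# New basis: w_j = sum_i a_{i,j} v_i.
w = [combine([A[i][j] for i in range(n)], v) for j in range(n)]

# Coordinates y of a vector z over the new basis (sample values).
y = [3, -1]
z_from_new = combine(y, w)

# Change-of-basis formula: x_i = sum_j a_{i,j} y_j.
x = [sum(A[i][j] * y[j] for j in range(n)) for i in range(n)]
z_from_old = combine(x, v)
```

Both reconstructions produce the same vector z, which is what the uniqueness argument in the proof relies on.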

Example

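Consider the Euclidean vector space <math>\mathbb R^2.</math> Its standard basis consists of the vectors <math>v_1= (1,0)</math> and <math>v_2= (0,1).</math> If one rotates them by an angle of t, one gets a new basis formed by <math>w_1=(\cos t, \sin t)</math> and <math>w_2=(-\sin t, \cos t).</math>

So, the change-of-basis matrix is <math>\begin{bmatrix}
\cos t& -\sin t\\
\sin t& \cos t
\end{bmatrix}.</math>

The change-of-basis formula asserts that, if <math>y_1, y_2</math> are the new coordinates of a vector <math>(x_1, x_2),</math> then one has

: <math>\begin{bmatrix}x_1\\x_2\end{bmatrix}=\begin{bmatrix}
\cos t& -\sin t\\
\sin t& \cos t
\end{bmatrix}\,\begin{bmatrix}y_1\\y_2\end{bmatrix}.</math>

That is,

: <math>x_1=y_1\cos t - y_2\sin t \qquad\text{and}\qquad x_2=y_1\sin t + y_2\cos t.</math>

This may be verified by writing

: <math>\begin{align}
x_1v_1+x_2v_2 &= (y_1\cos t - y_2\sin t) v_1 + (y_1\sin t + y_2\cos t) v_2\\
&= y_1 (\cos (t) v_1 + \sin(t)v_2) + y_2 (-\sin(t) v_1 +\cos(t) v_2)\\
&=y_1w_1+y_2w_2.
\end{align}</math>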
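The rotation example can also be checked numerically. This is a sketch under stated assumptions: the angle `t` and the new coordinates `y` are arbitrary sample values, and the function names are hypothetical helpers rather than anything defined in the article.

```python
import math

def rotation_change_of_basis(t):
    """Matrix whose columns are w1 and w2 written in the standard basis."""
    return [[math.cos(t), -math.sin(t)],
            [math.sin(t),  math.cos(t)]]

def mat_vec(a, y):
    """Product of a matrix (list of rows) and a vector (list)."""
    return [sum(a[i][j] * y[j] for j in range(len(y))) for i in range(len(a))]

t = math.pi / 6           # sample rotation angle
A = rotation_change_of_basis(t)
y = [2.0, 1.0]            # sample coordinates over the rotated basis
x = mat_vec(A, y)         # coordinates over the standard basis, x = A y

# Rebuild the same vector directly as y1*w1 + y2*w2 and compare with x.
w1 = (math.cos(t), math.sin(t))
w2 = (-math.sin(t), math.cos(t))
direct = [y[0] * w1[0] + y[1] * w2[0],
          y[0] * w1[1] + y[1] * w2[1]]
```

Up to floating-point rounding, `x` and `direct` agree, since both are the components of the same vector in the standard basis.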

In terms of linear maps

Normally, a matrix represents a linear map, and the product of a matrix and a column vector represents the function application of the corresponding linear map to the vector whose coordinates form the column vector. The change-of-basis formula is a specific case of this general principle, although this is not immediately clear from its definition and proof.

When one says that a matrix represents a linear map, one refers implicitly to bases of the vector spaces involved, and to the fact that the choice of a basis induces an isomorphism between a vector space and <math>F^n</math>, where F is the field of scalars. When only one basis is considered for each vector space, it is worthwhile to leave this isomorphism implicit, and to work up to an isomorphism. As several bases of the same vector space are considered here, a more accurate wording is required.

Let F be a field. The set <math>F^n</math> of the n-tuples is an F-vector space whose addition and scalar multiplication are defined component-wise. Its standard basis is the basis that has as its ith element the tuple with all components equal to 0 except the ith, which is 1.

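A basis <math>B=(v_1, \ldots, v_n)</math> of an F-vector space V defines a linear isomorphism <math>\phi\colon F^n\to V</math> by

: <math>\phi(x_1,\ldots,x_n)=\sum_{i=1}^n x_i v_i.</math>

Conversely, such a linear isomorphism defines a basis, which is the image by <math>\phi</math> of the standard basis of <math>F^n.</math>

Let <math>B_\mathrm{old}=(v_1, \ldots, v_n)</math> be the "old basis" of a change of basis, and <math>\phi_\mathrm{old}</math> the associated isomorphism. Given a change-of-basis matrix A, one can consider it as the matrix of an endomorphism <math>\psi_A</math> of <math>F^n.</math> Finally, define

: <math>\phi_\mathrm{new}=\phi_\mathrm{old}\circ\psi_A</math>

(where <math>\circ</math> denotes function composition), and

: <math>B_\mathrm{new}= \phi_\mathrm{new}(\phi_\mathrm{old}^{-1}(B_\mathrm{old})). </math>

A straightforward verification shows that this definition of <math>B_\mathrm{new}</math> is the same as that of the preceding section.

Now, by composing the equation <math>\phi_\mathrm{new}=\phi_\mathrm{old}\circ\psi_A</math> with <math>\phi_\mathrm{old}^{-1}</math> on the left and <math>\phi_\mathrm{new}^{-1}</math> on the right, one gets

: <math>\phi_\mathrm{old}^{-1} = \psi_A \circ \phi_\mathrm{new}^{-1}.</math>

It follows that, for <math>v\in V,</math> one has

: <math>\phi_\mathrm{old}^{-1}(v)= \psi_A(\phi_\mathrm{new}^{-1}(v)),</math>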

which is the change-of-basis formula expressed in terms of linear maps instead of coordinates.

Function defined on a vector space

A function that has a vector space as its domain is commonly specified as a multivariate function whose variables are the coordinates on some basis of the vector on which the function is applied.

When the basis is changed, the expression of the function is changed. This change can be computed by replacing the "old" coordinates with their expressions in terms of the "new" coordinates. More precisely, if f(x) is the expression of the function in terms of the old coordinates, and if x = Ay is the change-of-basis formula, then f(Ay) is the expression of the same function in terms of the new coordinates.

The fact that the change-of-basis formula expresses the old coordinates in terms of the new ones may seem unnatural, but it is useful here, as no matrix inversion is needed.
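As an illustration of this substitution, here is a minimal Python sketch. The function `f`, the matrix `A`, and the sample point `y` are hypothetical choices made for this example only.

```python
def f(x):
    """Hypothetical function, written in the old coordinates x = (x1, x2)."""
    return x[0] ** 2 + 3 * x[0] * x[1]

# Hypothetical change-of-basis matrix A; its columns are the new basis
# vectors expressed in the old coordinates.
A = [[1, 1],
     [0, 1]]

def old_from_new(y):
    """Change-of-basis formula x = A y."""
    return [sum(A[i][j] * y[j] for j in range(len(y))) for i in range(len(A))]

def g(y):
    """The same function expressed in the new coordinates: g(y) = f(A y)."""
    return f(old_from_new(y))

y = [2, 5]              # sample new coordinates
x = old_from_new(y)     # corresponding old coordinates
```

Here x1 = y1 + y2 and x2 = y2, so expanding f(Ay) by hand gives y1² + 5·y1·y2 + 4·y2², which is the expression of the same function in the new coordinates. Only A itself is used; its inverse is never computed.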

As the change-of-basis formula involves only linear functions, many function properties are kept by a change of basis. This allows defining these properties as properties of functions of a variable vector that are not related to any specific basis. So, a function whose domain is a vector space or a subset of it is

- a linear function,

- a polynomial function,

- a continuous function,

- a differentiable function,

- a smooth function,

- an analytic function,

if the multivariate function that represents it on some basis—and thus on every basis—has the same property.

This is especially useful in the theory of manifolds, as this allows extending the concepts of continuous, differentiable, smooth and analytic functions to functions that are defined on a manifold.

Linear maps

Consider a linear map T: W → V from a vector space W of dimension n to a vector space V of dimension m. It is represented on "old" bases of V and W by an m×n matrix M. A change of bases is defined by an m×m change-of-basis matrix P for V, and an n×n change-of-basis matrix Q for W.

On the "new" bases, the matrix of T is

: <math>P^{-1}MQ.</math>

This is a straightforward consequence of the change-of-basis formula.
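The reasoning can be spelled out as follows: if y holds the new coordinates of a vector of W, its old coordinates are Qy, the old coordinates of its image are MQy, and the new coordinates of the image are therefore P⁻¹MQy. The Python sketch below checks this on hypothetical matrices `M`, `P`, and `Q` (with m = 2 and n = 3); the helper functions are written for this example and use exact fractions.

```python
from fractions import Fraction

def mat_mul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def inverse_2x2(p):
    """Inverse of an invertible 2x2 matrix, using exact fractions."""
    det = Fraction(p[0][0] * p[1][1] - p[0][1] * p[1][0])
    return [[p[1][1] / det, -p[0][1] / det],
            [-p[1][0] / det, p[0][0] / det]]

# Hypothetical data: T maps W (dimension n = 3) to V (dimension m = 2).
M = [[1, 2, 0],
     [0, 1, 1]]            # matrix of T on the old bases (m x n)
P = [[2, 1],
     [1, 1]]               # change-of-basis matrix for V (m x m)
Q = [[1, 0, 1],
     [0, 1, 0],
     [0, 0, 1]]            # change-of-basis matrix for W (n x n)

M_new = mat_mul(mat_mul(inverse_2x2(P), M), Q)   # P^{-1} M Q

# Consistency check on a vector of W with new coordinates y.
y = [[1], [2], [3]]                      # column vector
old_image = mat_mul(M, mat_mul(Q, y))    # old coordinates of the image
new_image = mat_mul(M_new, y)            # new coordinates of the image
```

Applying P to `new_image` converts it back to old coordinates and recovers `old_image`, which is the change-of-basis formula in V.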

Endomorphisms

Endomorphisms are linear maps from a vector space V to itself. For a change of basis, the formula of the preceding section applies, with the same change-of-basis matrix on both sides of the formula. That is, if M is the square matrix of an endomorphism of V over an "old" basis, and P is a change-of-basis matrix, then the matrix of the endomorphism on the "new" basis is

: <math>P^{-1}MP.</math>

As every invertible matrix can be used as a change-of-basis matrix, this implies that two matrices are similar if and only if they represent the same endomorphism on two different bases.
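A small sketch of the endomorphism case follows. The matrices `M` and `P` are hypothetical; `P` was chosen so that its columns are eigenvectors of `M`, which makes the new matrix diagonal, but any invertible `P` would give a similar matrix.

```python
from fractions import Fraction

def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def inverse_2x2(P):
    """Exact inverse of an invertible 2x2 matrix."""
    (a, b), (c, d) = P
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

M = [[1, 2],
     [0, 3]]           # matrix of the endomorphism on the old basis
P = [[1, 1],
     [0, 1]]           # columns: new basis vectors in old coordinates

# Matrix of the same endomorphism on the new basis.
M_new = matmul(inverse_2x2(P), matmul(M, P))

# Both matrices describe the same map: compare on one vector.
x_new = [[2], [5]]
x_old = matmul(P, x_new)
image_old = matmul(M, x_old)
image_new = matmul(M_new, x_new)
```

Here `M` and `M_new` are similar matrices, and `matmul(P, image_new)` agrees with `image_old`.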

Bilinear forms

A bilinear form on a vector space V over a field F is a function V × V → F which is linear in both arguments. That is, B : V × V → F is bilinear if the maps v ↦ B(v, w) and v ↦ B(w, v) are linear for every fixed w ∈ V.

The matrix of a bilinear form B on a basis (v₁, …, vₙ) (the "old" basis in what follows), also denoted B, is the matrix whose entry in the ith row and jth column is B(vᵢ, vⱼ). It follows that if v and w also denote the column vectors of the coordinates of two vectors v and w, one has

B(v, w) = vᵀBw,

where vᵀ denotes the transpose of the matrix v.

If P is a change-of-basis matrix, then a straightforward computation shows that the matrix of the bilinear form on the new basis is

PᵀBP.

A symmetric bilinear form is a bilinear form B such that B(v, w) = B(w, v) for every v and w in V. It follows that the matrix of B on any basis is symmetric. This implies that the property of being a symmetric matrix must be preserved by the above change-of-basis formula. One can also check this by noting that the transpose of a matrix product is the product of the transposes computed in the reverse order. In particular,

(PᵀBP)ᵀ = PᵀBᵀP,

and the two members of this equation equal PᵀBP if the matrix B is symmetric.
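The change-of-basis formula PᵀBP for bilinear forms can be illustrated as follows. This is a sketch with hypothetical matrices `B` (symmetric), `C` (not symmetric) and `P`, using integer arithmetic only.

```python
def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def transpose(A):
    """Transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*A)]

def form(matrix, v, w):
    """Evaluate v^T * matrix * w for column vectors v and w."""
    return matmul(transpose(v), matmul(matrix, w))[0][0]

B = [[1, 2],
     [2, 0]]           # symmetric matrix of the form on the old basis
P = [[1, 1],
     [0, 2]]           # change-of-basis matrix

B_new = matmul(transpose(P), matmul(B, P))   # matrix on the new basis

# The value of the form does not depend on the basis used to compute it.
v_new, w_new = [[1], [1]], [[2], [-1]]
v_old, w_old = matmul(P, v_new), matmul(P, w_new)
value_old = form(B, v_old, w_old)
value_new = form(B_new, v_new, w_new)

# For a non-symmetric matrix C, (P^T C P)^T equals P^T C^T P.
C = [[0, 1],
     [0, 0]]
lhs = transpose(matmul(transpose(P), matmul(C, P)))
rhs = matmul(transpose(P), matmul(transpose(C), P))
```

In this example `B_new` is again symmetric, and `value_old` and `value_new` coincide.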

If the characteristic of the ground field F is not two, then for every symmetric bilinear form there is a basis for which the matrix is diagonal. Moreover, the resulting nonzero entries on the diagonal are defined up to multiplication by a square. So, if the ground field is the field of the real numbers, these nonzero entries can be chosen to be either 1 or –1. Sylvester's law of inertia is a theorem that asserts that the numbers of 1 and of –1 depend only on the bilinear form, and not on the change of basis.
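Sylvester's law of inertia can be observed on a small example. In the sketch below, the symmetric matrix `B` and the two change-of-basis matrices `P1` and `P2` are hypothetical; both happen to diagonalize the form, with different diagonal entries but the same number of positive and negative ones.

```python
def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def transpose(A):
    """Transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*A)]

def congruent(B, P):
    """Matrix P^T B P of the bilinear form on the new basis."""
    return matmul(transpose(P), matmul(B, P))

def sign_counts(D):
    """Numbers of positive and negative diagonal entries of D."""
    diag = [D[i][i] for i in range(len(D))]
    return (sum(1 for d in diag if d > 0), sum(1 for d in diag if d < 0))

B = [[0, 1],
     [1, 0]]           # symmetric form on the old basis

P1 = [[1, 1],
      [1, -1]]
P2 = [[1, 1],
      [2, -2]]

D1 = congruent(B, P1)  # diagonal in the first new basis
D2 = congruent(B, P2)  # diagonal in the second new basis
```

Rescaling each basis vector by the square root of the absolute value of the corresponding diagonal entry would turn both `D1` and `D2` into the diagonal matrix with entries 1 and –1.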

Symmetric bilinear forms over the reals are often encountered in geometry and physics, typically in the study of quadrics and of the inertia of a rigid body. In these cases, orthonormal bases are especially useful; this means that one generally prefers to restrict changes of basis to those that have an orthogonal change-of-basis matrix, that is, a matrix P such that Pᵀ = P⁻¹. For such matrices, the change-of-basis formula is the same for a symmetric bilinear form and for the endomorphism that is represented by the same symmetric matrix. The spectral theorem asserts that, given such a symmetric matrix, there is an orthogonal change of basis such that the resulting matrix (of both the bilinear form and the endomorphism) is a diagonal matrix with the eigenvalues of the initial matrix on the diagonal. It follows that, over the reals, if the matrix of an endomorphism is symmetric, then it is diagonalizable.
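The orthogonal case can be sketched with floating-point numbers. The symmetric matrix `M` and the orthogonal matrix `P` below are hypothetical; the columns of `P` are unit eigenvectors of `M`, picked by hand rather than computed by an eigenvalue routine.

```python
import math

def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def transpose(A):
    """Transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*A)]

def close(A, B, tol=1e-12):
    """Entrywise comparison of two matrices up to rounding error."""
    return all(math.isclose(a, b, abs_tol=tol)
               for row_a, row_b in zip(A, B) for a, b in zip(row_a, row_b))

s = 1 / math.sqrt(2)
M = [[2, 1],
     [1, 2]]           # real symmetric matrix, eigenvalues 3 and 1
P = [[s, s],
     [s, -s]]          # orthogonal: its columns are orthonormal

identity = [[1, 0], [0, 1]]
is_orthogonal = close(matmul(transpose(P), P), identity)

# Since P^T = P^(-1), the formula P^T M P (bilinear form) and the
# formula P^(-1) M P (endomorphism) give the same matrix.
D = matmul(transpose(P), matmul(M, P))
```

Up to rounding, `D` is the diagonal matrix with the eigenvalues 3 and 1 on the diagonal.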

See also

- Active and passive transformation

- Covariance and contravariance of vectors

- Integral transform, the continuous analogue of change of basis.

Notes

- ^ Although a basis is generally defined as a set of vectors (for example, as a spanning set that is linearly independent), the tuple notation is convenient here, since the indexing by the first positive integers makes the basis an ordered basis.

References

- ^ (Anton 1987, pp. 221–237)

- ^ (Beauregard & Fraleigh 1973, pp. 240–243)

- ^ (Nering 1970, pp. 50–52)

Bibliography

- Anton, Howard (1987), Elementary Linear Algebra (5th ed.), New York: Wiley, ISBN 0-471-84819-0

- Beauregard, Raymond A.; Fraleigh, John B. (1973), A First Course In Linear Algebra: with Optional Introduction to Groups, Rings, and Fields, Boston: Houghton Mifflin Company, ISBN 0-395-14017-X

- Nering, Evar D. (1970), Linear Algebra and Matrix Theory (2nd ed.), New York: Wiley, LCCN 76091646

External links

- MIT Linear Algebra Lecture on Change of Basis, from MIT OpenCourseWare

- Khan Academy Lecture on Change of Basis, from Khan Academy